Boiling is not just for heating up dinner. It’s also for cooling things down. Turning liquid into gas removes energy from hot surfaces, and keeps everything from nuclear power plants to powerful computer chips from overheating. But when surfaces grow too hot, they might experience what’s called a boiling crisis.

In a boiling crisis, bubbles form quickly, and before they detach from the heated surface, they cling together, establishing a vapor layer that insulates the surface from the cooling fluid above. Temperatures rise even faster and can cause catastrophe. Operators would like to predict such failures, and new research offers insight into the phenomenon using high-speed infrared cameras and machine learning.

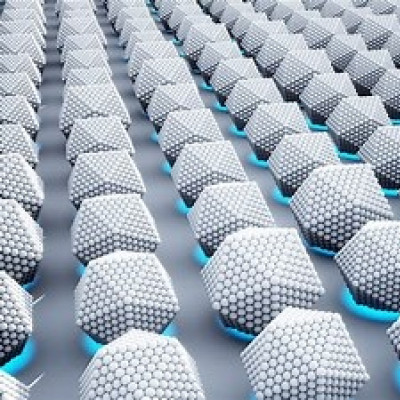

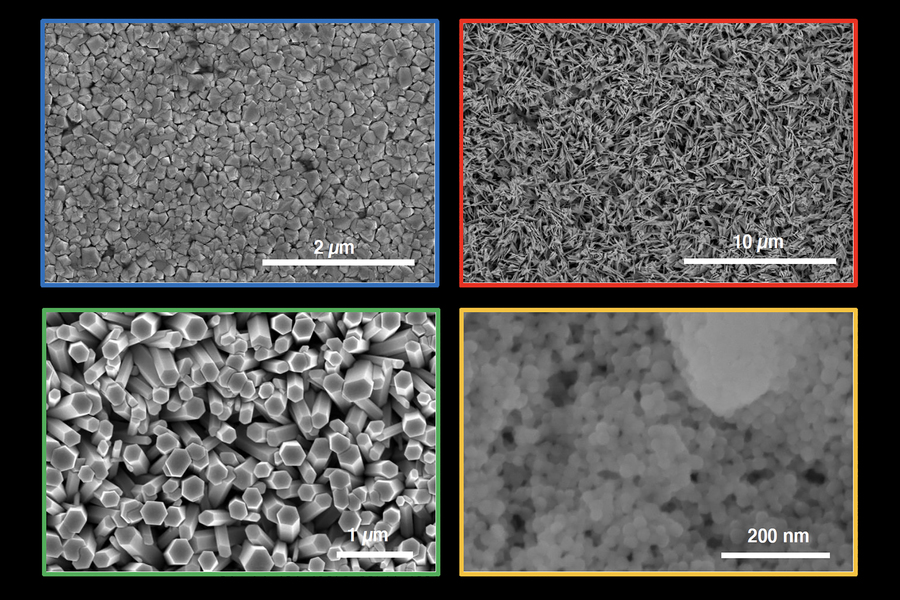

Pictures of the boiling surfaces taken using a scanning electron microscope: indium tin oxide (top left), copper oxide nanoleaves (top right), zinc oxide nanowires (bottom left), and a porous coating of silicon dioxide nanoparticles obtained by layer-by-layer deposition (bottom right).

Matteo Bucci, the Norman C. Rasmussen Assistant Professor of Nuclear Science and Engineering at MIT, led the new work, published June 23 in Applied Physics Letters. In previous research, his team spent almost five years developing a technique in which machine learning could streamline relevant image processing. In the experimental setup for both projects, a transparent heater 2 centimeters across sits below a bath of water. An infrared camera sits below the heater, pointed up and recording at 2,500 frames per second with a resolution of about 0.1 millimeter. Previously, people studying the videos would have to manually count the bubbles and measure their characteristics, but Bucci trained a neural network to do the chore, cutting a three-week process to about five seconds. “Then we said, ‘Let’s see if other than just processing the data we can actually learn something from an artificial intelligence,’” Bucci says.
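To put those setup figures in perspective, here is a rough back-of-the-envelope sketch in Python. The variable names are illustrative, and the speedup assumes the "three-week process" means three continuous calendar weeks, which the article does not specify; if it refers to working hours, the factor would be smaller.

```python
# Figures quoted in the article.
heater_width_um = 20_000      # 2 centimeters, in micrometers
resolution_um = 100           # about 0.1 millimeter, in micrometers
frames_per_second = 2_500

# Resolved points across the heater and time between frames.
points_across = heater_width_um // resolution_um
frame_interval_ms = 1_000 / frames_per_second

# ASSUMPTION: "three weeks" is treated as continuous calendar time.
manual_seconds = 3 * 7 * 24 * 3600
automated_seconds = 5
speedup = manual_seconds // automated_seconds

print(points_across)      # 200
print(frame_interval_ms)  # 0.4
print(speedup)            # 362880
```

Under that assumption, the camera resolves roughly 200 points across the heater every 0.4 milliseconds, and the automated analysis runs several hundred thousand times faster than the manual one.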

The goal was to estimate how close the water was to a boiling crisis. The system looked at 17 factors provided by the image-processing AI: the “nucleation site density” (the number of sites per unit area where bubbles regularly grow on the heated surface), as well as, for each video frame, the mean infrared radiation at those sites and 15 other statistics about the distribution of radiation around those sites, including how they’re changing over time. Manually finding a formula that correctly weighs all those factors would present a daunting challenge. But “artificial intelligence is not limited by the speed or data-handling capacity of our brain,” Bucci says. Further, “machine learning is not biased” by our preconceived hypotheses about boiling.

To collect data, they boiled water on a surface of indium tin oxide, by itself or with one of three coatings: copper oxide nanoleaves, zinc oxide nanowires, or layers of silicon dioxide nanoparticles. They trained a neural network on 85 percent of the data from the first three surfaces, then tested it on the remaining 15 percent of the data from those surfaces plus all the data from the fourth surface, to see how well it could generalize to new conditions. According to one metric, it was 96 percent accurate, even though it hadn’t been trained on all the surfaces. “Our model was not just memorizing features,” Bucci says. “That’s a typical issue in machine learning. We’re capable of extrapolating predictions to a different surface.”
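The evaluation protocol described here, with one surface held out entirely, can be sketched in a few lines of Python. This is a minimal illustration, not the team's code: the function `split_by_surface`, the placeholder samples, and the choice of silicon dioxide as the held-out "fourth" surface are all assumptions made for the example.

```python
import random

def split_by_surface(samples, held_out_surface, train_fraction=0.85, seed=0):
    """Split (surface, features, label) samples into train and test sets.

    Every sample from `held_out_surface` goes to the test set. The rest
    are shuffled and divided according to `train_fraction`.
    """
    rng = random.Random(seed)
    seen = [s for s in samples if s[0] != held_out_surface]
    unseen = [s for s in samples if s[0] == held_out_surface]
    rng.shuffle(seen)
    cut = round(train_fraction * len(seen))
    train = seen[:cut]
    test = seen[cut:] + unseen
    return train, test

# Placeholder data: 100 dummy samples per surface, 17 features each.
surfaces = ["ITO", "CuO nanoleaves", "ZnO nanowires", "SiO2 nanoparticles"]
samples = [(name, [0.0] * 17, 0.0) for name in surfaces for _ in range(100)]

# ASSUMPTION: which surface was held out is not stated in the article.
train, test = split_by_surface(samples, held_out_surface="SiO2 nanoparticles")
print(len(train), len(test))  # 255 145
```

Keeping one surface out of training altogether is what allows the test score to say something about generalization: a model that had only memorized the three training surfaces would be expected to do poorly on the fourth.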

The team also found that all 17 factors contributed significantly to prediction accuracy (though some more than others). Further, instead of treating the model as a black box that used 17 factors in unknown ways, they identified three intermediate factors that explained the phenomenon: nucleation site density, bubble size (which was calculated from eight of the 17 factors), and the product of growth time and bubble departure frequency (which was calculated from 12 of the 17 factors). Bucci says models in the literature often use only one factor, but this work shows that we need to consider many, and their interactions. “This is a big deal.”

“This is great,” says Rishi Raj, an associate professor at the Indian Institute of Technology at Patna, who was not involved in the work. “Boiling has such complicated physics.” It involves at least two phases of matter, and many factors contributing to a chaotic system. “It’s been almost impossible, despite at least 50 years of extensive research on this topic, to develop a predictive model,” Raj says. “It makes a lot of sense to use the new tools of machine learning.”

Researchers have debated the mechanisms behind the boiling crisis. Does it result solely from phenomena at the heating surface, or also from distant fluid dynamics? This work suggests surface phenomena are enough to forecast the event.

Predicting proximity to the boiling crisis doesn’t only increase safety. It also improves efficiency. By monitoring conditions in real time, a system could push chips or reactors to their limits without throttling them or building unnecessary cooling hardware. It’s like a Ferrari on a track, Bucci says: “You want to unleash the power of the engine.”

In the meantime, Bucci hopes to integrate his diagnostic system into a feedback loop that can control heat transfer, thus automating future experiments, allowing the system to test hypotheses and collect new data. “The idea is really to push the button and come back to the lab once the experiment is finished.” Is he worried about losing his job to a machine? “We’ll just spend more time thinking, not doing operations that can be automated,” he says. In any case: “It’s about raising the bar. It’s not about losing the job.”

Read the original article on Massachusetts Institute of Technology (MIT).