As robotic devices such as artificial prosthetics and human-computer interfaces (HCI) become increasingly integrated into society, researchers have been looking more closely at how to make devices that serve the function of hands more sensitive. Human fingertips are remarkably sensitive. They can detect details of an object as small as 40μm (about half the width of a human hair), discern subtle differences in surface texture, and apply just enough force to lift either an egg or a 20 lb. bag of dog food without slipping. They can also manipulate objects with relative ease.

Engineers have been working, with varying levels of success, to mimic this ability for eventual robotic or prosthetic use. At the University of Michigan, Prof. P.C. Ku and his group have recently reported an improved tactile sensing method that detects the direction as well as the magnitude of a force with high sensitivity. The system’s high resolution makes it well suited to robotic and HCI applications, and it is relatively simple to manufacture.

“We are bridging the gap between humans and computers, so maybe we can teach a robot how to feel objects in a way that would be closer to our own capabilities,” said doctoral student Nathan Dvořák.

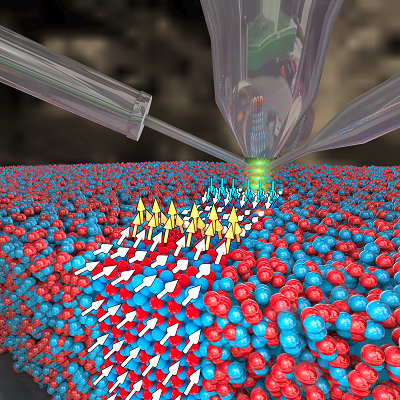

Dvořák is a member of Ku’s team, which has been developing tactile sensors for the past several years. They are the first to combine a highly sensitive sense of touch with directionality using asymmetric nanopillars, so that a prosthetic device could grip a falling object more tightly, or a human-computer interface could tell a rising motion from a falling one.

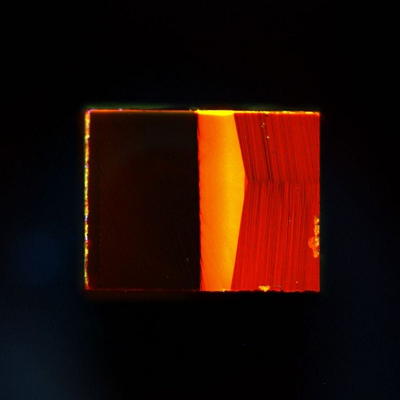

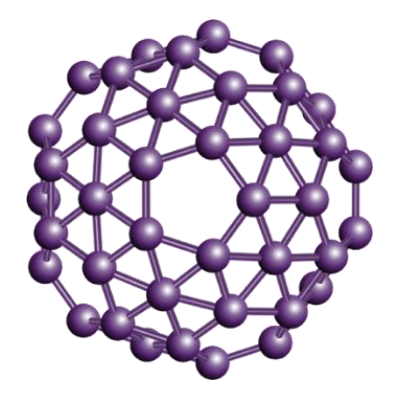

As a proof of concept, the team built a sensor roughly the size of a fingertip that contains 1.6 million gallium nitride (GaN) nanopillars. GaN was chosen because it is piezoelectric: it generates an electrical charge when mechanically stressed, which allows it to sense force.

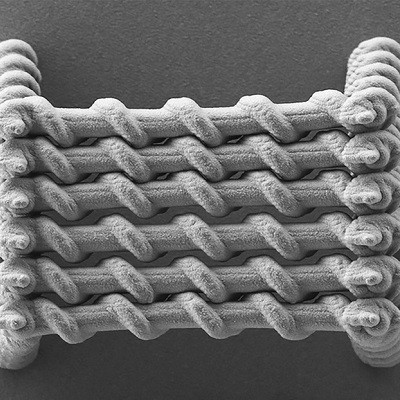

The elliptical shape and arrangement of the nanopillars are what allow the sensor to detect direction.

The smallest unit is the nanopillar. Each nanopillar is elliptical and 450nm tall, nearly 200 times smaller than the width of a typical human hair, and each is equipped with its own LED.

The nanopillars are grouped into rectangular arrays of 100×125 nanopillars, or 12,500 nanopillars per array. Each array is placed close to a second array oriented at right angles to it; together, the two orthogonal arrays form a node. This pairing is what lets the sensor detect direction.
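To make the role of the orthogonal pair concrete, here is a minimal Python sketch under a deliberately simplified, hypothetical model: each array’s fractional change in light intensity is assumed to be proportional to the in-plane force component along that array’s axis. The names `NodeReading` and `estimate_direction` and the `gain` calibration factor are illustrative inventions, not the team’s method; real elliptical pillars would need an empirically calibrated response.

```python
import math
from dataclasses import dataclass


@dataclass
class NodeReading:
    """Fractional intensity changes of the two orthogonal arrays in one node.

    Hypothetical model: each array's signal is assumed to be proportional
    to the in-plane force component along that array's own axis.
    """
    delta_x: float  # array whose pillars are oriented along x
    delta_y: float  # partner array rotated 90 degrees, oriented along y


def estimate_direction(reading: NodeReading, gain: float = 1.0) -> tuple[float, float]:
    """Return (force magnitude, direction in degrees) under the linear model.

    `gain` is a hypothetical calibration constant converting signal to force.
    """
    fx = reading.delta_x / gain
    fy = reading.delta_y / gain
    magnitude = math.hypot(fx, fy)                # length of the force vector
    angle_deg = math.degrees(math.atan2(fy, fx))  # angle measured from the x axis
    return magnitude, angle_deg


# A push with equal x and y components points at 45 degrees.
mag, angle = estimate_direction(NodeReading(delta_x=0.03, delta_y=0.03))
print(f"magnitude={mag:.4f}, angle={angle:.1f} deg")  # magnitude=0.0424, angle=45.0 deg
```

Under the same assumption, flipping the sign of `delta_y` moves the estimated angle from +90 to -90 degrees, which is the kind of distinction that separates a rising motion from a falling one.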

A complete sensor consists of 64 nodes arranged in a square.
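The pillar counts in this section fit together. A quick Python check, using only figures stated in the article (12,500 pillars per array, two arrays per node, 64 nodes) and assuming that the square layout is a square grid of nodes:

```python
import math

PILLARS_PER_ARRAY = 12_500  # stated in the article
ARRAYS_PER_NODE = 2         # two orthogonal arrays form one node
NODES_PER_SENSOR = 64       # stated in the article

pillars_per_node = PILLARS_PER_ARRAY * ARRAYS_PER_NODE
pillars_per_sensor = pillars_per_node * NODES_PER_SENSOR

# Assumption: "arranged in a square" means a square grid of nodes.
nodes_per_side = math.isqrt(NODES_PER_SENSOR)
assert nodes_per_side ** 2 == NODES_PER_SENSOR

print(f"{pillars_per_node:,} pillars per node")      # 25,000 pillars per node
print(f"{pillars_per_sensor:,} pillars per sensor")  # 1,600,000 pillars per sensor
print(f"{nodes_per_side} x {nodes_per_side} nodes")  # 8 x 8 nodes
```

The product matches the 1.6 million nanopillars quoted for the proof-of-concept sensor.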

Because the sensor can determine the direction of a force, it could alert a future prosthetic device that an object may be slipping from its grasp and that a tighter grip is needed.

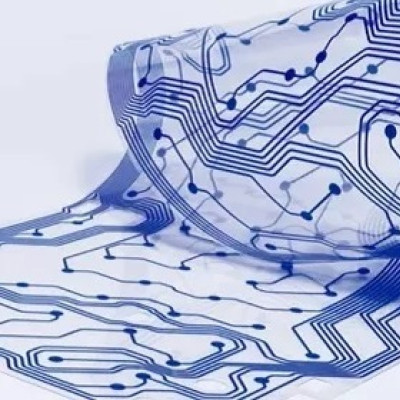

The system does not require complex electrical interconnects, which demand very high manufacturing uniformity. It also relies on well-established, easily repeatable fabrication methods.

“And we don’t need to have 100% yield on our devices, or even close,” said Dvořák. “On one of my current devices there are 1.6 million nanopillars on the sensor, and it is still effective even if 25% of the nanopillars in an array are damaged during manufacturing, because we’re detecting the change of light intensity rather than the absolute light intensity.”
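Dvořák’s point about measuring the change in intensity rather than its absolute value can be illustrated with a toy simulation. This is a hypothetical model, not the team’s processing: a damaged pillar is assumed to stay dark in both the untouched and the touched frame, and every working pillar is assumed to brighten by the same fraction when pressed. The function names and the 5% response are illustrative.

```python
import random


def array_signal(baseline: list[float], touched: list[float]) -> tuple[float, float]:
    """Return (absolute touched intensity, fractional change) for one array."""
    base_total = sum(baseline)
    if base_total == 0:
        raise ValueError("no working pillars in this array")
    touched_total = sum(touched)
    return touched_total, (touched_total - base_total) / base_total


def simulate(damaged_fraction: float, strain_response: float = 0.05,
             n: int = 12_500, seed: int = 0) -> tuple[float, float]:
    """Simulate one array of n pillars with a given fraction of damaged pillars."""
    rng = random.Random(seed)
    baseline, touched = [], []
    for _ in range(n):
        if rng.random() < damaged_fraction:
            # Hypothetical: a damaged pillar emits no light in either frame.
            baseline.append(0.0)
            touched.append(0.0)
        else:
            level = rng.uniform(0.8, 1.2)  # pillar-to-pillar brightness variation
            baseline.append(level)
            touched.append(level * (1.0 + strain_response))
    return array_signal(baseline, touched)


for frac in (0.0, 0.25):
    absolute, relative = simulate(frac)
    print(f"damaged={frac:.0%}  absolute={absolute:.0f}  relative change={relative:.3f}")
```

Under these assumptions the absolute brightness falls by roughly a quarter when 25% of the pillars are dead, while the fractional change stays at 0.050, which is why a partial-yield array can still report the touch.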

The sensor was able to discern features as small as 4.3μm, nearly 10 times finer than a human fingertip can resolve. It could also detect a load of about 0.1 gram, comparable to the weight of a paperclip.
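The “nearly 10x” comparison follows from the two feature sizes quoted in this article, the 40μm detail a fingertip can detect and the 4.3μm feature the sensor resolved:

$$
\frac{40\ \mu\text{m}}{4.3\ \mu\text{m}} \approx 9.3
$$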

The current proof of concept uses an off-the-shelf imager to detect the change in light that occurs when the surface is touched.

“We are now working to develop a complete system,” said Dvořák. Once the current system operates electrically, he plans to mount the sensor on top of a CMOS imager that will record the changes in light intensity, and to connect it to a microprocessor for automated information processing.

Read the original article from the University of Michigan.