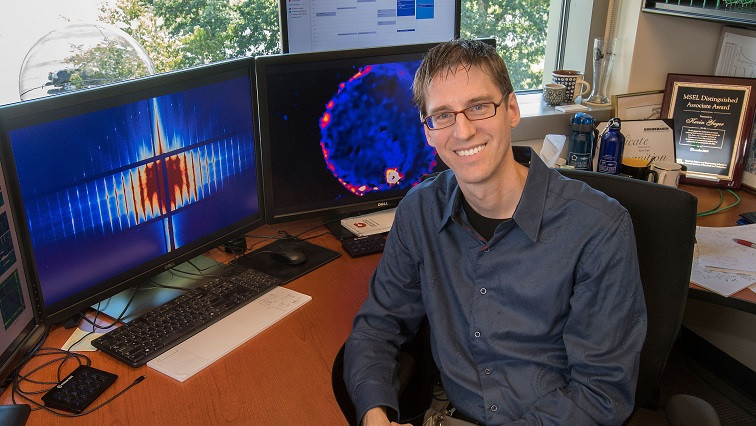

Kevin Yager, leader of the electronic nanomaterials group at the Center for Functional Nanomaterials (CFN), a U.S. Department of Energy (DOE) Office of Science User Facility at DOE’s Brookhaven National Laboratory, has imagined how recent advances in artificial intelligence (AI) and machine learning (ML) could aid scientific brainstorming and ideation. To explore this idea, he has developed a chatbot with knowledge of the kinds of science he works on.

Rapid advances in AI and ML have given rise to programs that can generate creative text and useful software code. These general-purpose chatbots have recently captured the public imagination. Existing chatbots, based on large, diverse language models, lack detailed knowledge of scientific sub-domains. By leveraging a document-retrieval method, Yager’s bot is knowledgeable in areas of nanomaterial science that other bots are not. The details of this project, and how other scientists can use this AI colleague in their own work, have recently been published in Digital Discovery.

Rise of the Robots

CFN has been looking into new ways to leverage AI/ML to accelerate nanomaterial discovery for a long time. Currently, these tools are helping researchers quickly identify, catalog, and choose samples, automate experiments, control equipment, and discover new materials. Esther Tsai, a scientist in the electronic nanomaterials group at CFN, is developing an AI companion to help speed up materials research experiments at the National Synchrotron Light Source II (NSLS-II). NSLS-II is another DOE Office of Science User Facility at Brookhaven Lab.

At CFN, there has been a lot of work on AI/ML that can help drive experiments through the use of automation, controls, robotics, and analysis, but having a program that was adept with scientific text was something that researchers hadn’t explored as deeply. Being able to quickly document, understand, and convey information about an experiment can help in a number of ways—from breaking down language barriers to saving time by summarizing larger pieces of work.

Watching Your Language

Building a specialized chatbot required domain-specific text: language taken from the areas the bot is intended to focus on. In this case, the text comes from scientific publications. Domain-specific text helps the AI model understand new terminology and definitions and introduces it to frontier scientific concepts. Most importantly, this curated set of documents enables the AI model to ground its reasoning in trusted facts.

To emulate natural human language, AI models are trained on existing text, enabling them to learn the structure of language, memorize various facts, and develop a primitive sort of reasoning. Rather than laboriously retrain the AI model on nanoscience text, Yager gave it the ability to look up relevant information in a curated set of publications. Providing it with a library of relevant data was only half of the battle. To use this text accurately and effectively, the bot would need a way to decipher the correct context.

“A challenge that’s common with language models is that sometimes they ‘hallucinate’ plausible sounding but untrue things,” explained Yager. “This has been a core issue to resolve for a chatbot used in research as opposed to one doing something like writing poetry. We don’t want it to fabricate facts or citations. This needed to be addressed. The solution for this was something we call ‘embedding,’ a way of categorizing and linking information quickly behind the scenes.”

Embedding is a process that transforms words and phrases into numerical values. The resulting “embedding vector” quantifies the meaning of the text. When a user asks the chatbot a question, it’s also sent to the ML embedding model to calculate its vector value. This vector is used to search through a pre-computed database of text chunks from scientific papers that were similarly embedded. The bot then uses text snippets it finds that are semantically related to the question to get a more complete understanding of the context.
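The lookup described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions, not the code behind CFN’s chatbot: the `embed` function here is a toy hashed bag-of-words stand-in for a real ML embedding model, and the names `build_index` and `search` and the sample text chunks are all hypothetical.

```python
import hashlib
import math
import re

DIM = 256  # illustrative vector size; a real embedding model defines its own


def embed(text: str) -> list[float]:
    """Toy stand-in for an ML embedding model: hashed bag-of-words, unit length."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # Map each word to a fixed bucket so the result is deterministic.
        idx = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    # Both vectors are unit length, so the dot product is the cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def build_index(chunks: list[str]) -> list[tuple[str, list[float]]]:
    """Pre-compute an embedding vector for every text chunk."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def search(index, question: str, top_k: int = 2) -> list[str]:
    """Embed the question and return the top_k most similar chunks."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


# Made-up example chunks standing in for passages from scientific papers.
chunks = [
    "Block copolymer thin films self-assemble into ordered nanoscale patterns.",
    "X-ray scattering measures the structure of nanomaterials at a synchrotron beamline.",
    "Autonomous experiments use machine learning to choose the next measurement.",
]
index = build_index(chunks)
top = search(index, "How do block copolymer films self-assemble?", top_k=1)
print(top)
```

A learned embedding model would place paraphrases close together even when they share no words, which this word-counting stand-in cannot do; the search step over pre-computed vectors works the same way in either case.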

The user’s query and the text snippets are combined into a “prompt” and sent to a large language model, an expansive program that creates text modeled on natural human language, which generates the final response. The embedding ensures that the text being pulled is relevant in the context of the user’s question. By providing text chunks from the body of trusted documents, the chatbot generates answers that are factual and sourced.
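As a rough illustration of how a question and its retrieved snippets might be combined into a single prompt, consider the sketch below. The function name `build_prompt`, the instruction wording, the file names, and the snippet text are all hypothetical and are not taken from CFN’s software. The call to the language model itself is omitted.

```python
def build_prompt(question: str, snippets: list[tuple[str, str]]) -> str:
    """Combine retrieved (source, text) snippets and the user's question into one prompt."""
    # Number each excerpt so the model can cite it in its answer.
    context = "\n".join(
        f"[{i}] ({source}) {text}"
        for i, (source, text) in enumerate(snippets, start=1)
    )
    return (
        "Answer the question using only the excerpts below. "
        "Cite excerpts by number, and say so if they do not contain the answer.\n\n"
        f"Excerpts:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Made-up snippets standing in for chunks retrieved from trusted papers.
snippets = [
    ("paper_A.pdf", "Block copolymers self-assemble into periodic nanoscale domains."),
    ("paper_B.pdf", "Annealing temperature influences the ordering of the film."),
]
prompt = build_prompt("What controls ordering in block copolymer films?", snippets)
print(prompt)
```

Keeping the source label next to each excerpt is one simple way to let the final answer point back to the documents it drew on.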

“The program needs to be like a reference librarian,” said Yager. “It needs to heavily rely on the documents to provide sourced answers. It needs to be able to accurately interpret what people are asking and be able to effectively piece together the context of those questions to retrieve the most relevant information. While the responses may not be perfect yet, it’s already able to answer challenging questions and trigger some interesting thoughts while planning new projects and research.”

Bots Empowering Humans

CFN is developing AI/ML systems as tools that can liberate human researchers to work on more challenging and interesting problems and to get more out of their limited time while computers automate repetitive tasks in the background. There are still many unknowns about this new way of working, but these questions are the start of important discussions scientists are having right now to ensure AI/ML use is safe and ethical.

“There are a number of tasks that a domain-specific chatbot like this could clear from a scientist’s workload. Classifying and organizing documents, summarizing publications, pointing out relevant info, and getting up to speed in a new topical area are just a few potential applications,” remarked Yager. “I’m excited to see where all of this will go, though. We never could have imagined where we are now three years ago, and I’m looking forward to where we’ll be three years from now.”

For researchers interested in trying this software out for themselves, the source code for CFN’s chatbot and associated tools can be found in this GitHub repository.

Read the original article on Brookhaven National Laboratory.