Every search engine query, every AI-generated text and developments such as autonomous driving: In the age of artificial intelligence (AI) and big data, computers and data centres consume a lot of energy. By contrast, the human brain is far more energy-efficient. In order to develop more powerful and energy-saving computers inspired by the brain, a research team from Materials Science and Electrical Engineering at Kiel University (CAU) has now identified fundamental requirements for suitable hardware. The scientists have developed materials that behave dynamically in a similar way to biological nervous systems. Their results have been published in the journal Materials Today and could lead to a new type of information processing in electronic systems.

Processing information dynamically instead of serially

"Computers process information serially, whereas our brain processes information n parallel and dynamically. This is much faster and uses less energy, for example in pattern recognition," says Prof Dr Hermann Kohlstedt, Professor of Nanoelectronics and spokesperson for the Collaborative Research Centre 1461 "Neurotronics” at Kiel University. The researchers want use nature as a source of inspiration for new electronic components and computer architectures. Unlike conventional computer chips, transistors and processors, they are designed to process signals in a similar way to the constantly changing network of neurons and synapses in our brain.

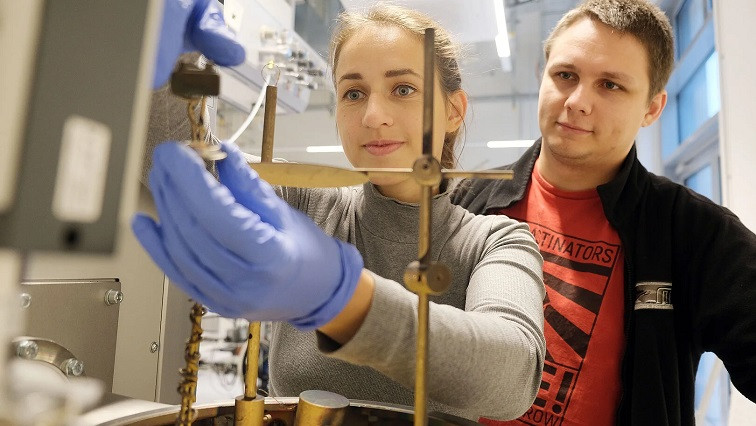

"But computers are still based on silicon technology. Although there has been impressive progress in hardware, networks of neurons and synapses remain unrivalled in terms of connectivity and robustness," says Dr Alexander Vahl, a materials scientist. Research on new materials and processes is needed to be able to map the dynamics of biological information processing.

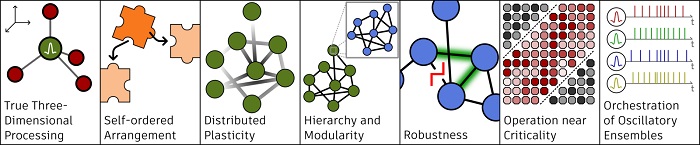

The research team therefore focussed on developing materials that behave dynamically in a similar way to three-dimensional biological nervous systems. Here, "dynamic" means that the arrangement of atoms and particles in the materials can change. To this end, the researchers have identified seven basic principles that computer hardware must fulfil in order to function similarly to the brain. These include, for example, a certain degree of changeability: the so-called plasticity of the brain is a requirement for learning and memory processes. The materials the researchers developed in response fulfil several of these basic principles. However, the "ultimate" material that fulfils all of them does not yet exist.

Beyond classic silicon technology

"When we combine these materials with each other or with other materials, we open up possibilities for computers that go beyond traditional silicon technology," says Prof. Dr. Rainer Adelung, Professor of Functional Nanomaterials. "Industry and society need more and more computing power, but strategies such as the miniaturisation of electronics are now reaching their technical limits in standard computers. With our study, we want to open up new horizons".

As an example, Maik-Ivo Terasa, a doctoral researcher in materials science and one of the study's first authors, describes the unusual behaviour of the special granular networks developed by the research team: "If we produce silver-gold nanoparticles in a certain way and apply an electrical signal, they show special properties. They are characterised by a balance between stability and a rapid change in their conductivity". In a similar way, the brain works best when there is a balance between plasticity and stability, known as criticality.

In three further experiments, the researchers showed that both zinc oxide nanoparticles and electrochemically formed metal filaments can be used to change the network paths via the electrical input of oscillators. When the research team coupled these circuits, their electrical signal deflections synchronised over time. Something similar happens during conscious sensory perception with the electrical impulses that exchange information between neurons.

Basic principles of biological information processing

In the brain, information is passed on via a dynamic, three-dimensional (1) network of neurons and synapses, which constantly rearrange themselves in a self-organised manner (2). This so-called spatial plasticity (3) is considered a requirement for learning and memory processes and therefore also for computational performance based on biological models. In addition, biological nervous systems have a hierarchical and modular structure (4) consisting of smaller networks and longer connections. This makes them robust (5) against minor disturbances, as they can easily compensate for them. The brain works best in a state close to criticality (6), the balance between plasticity and stability. During the sensory perception of the environment, the electrical impulses of the neurons are synchronised (7).

Read the original article on Kiel University.